This week, two high-profile cases of government entities using facial recognition technology to identify individuals in photos or video footage made headlines. In Washington, Utah, and Vermont, Immigration and Customs Enforcement (ICE) agents have used photos from driver’s license databases in an attempt to identify undocumented immigrants, who had legally obtained driver’s licenses at the urging of their state governments. Similarly, it was revealed that footage from cameras installed across Detroit to deter crime was being analyzed with facial recognition technology, which compared the captured images against driver’s license and mugshot databases to identify possible suspects.

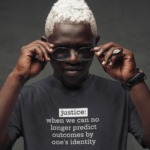

It is certainly debatable whether these tactics are ethical to begin with: do they represent a government invasion of personal privacy? Many would deem these specific applications of the technology unethical, while others may object more broadly to government surveillance, which in these cases took place without wide publicity (Detroit) or in secret (ICE). Yet neither of these concerns addresses the most insidious problem with facial recognition software: it is rife with racial bias.

As Detroit software engineer George Byers II explains, “Facial recognition software proves to be less accurate at identifying people with darker pigmentation. We live in a major black city. That’s a problem.” In the case of ICE, Alvaro Bedoya, the founding director of Georgetown Law’s Center on Privacy & Technology, summarizes the problem succinctly: “The question isn’t whether you’re undocumented — but rather whether a flawed algorithm thinks you look like someone who’s undocumented.”

This phenomenon, called “algorithmic bias,” has been widely documented. A study published in January found that, unless explicitly calibrated for African American faces, facial recognition software has a significantly higher “false match” rate for African Americans, and that software calibrated for African American faces becomes less accurate at recognizing white faces. Earlier this year, another study found that autonomous vehicles were 5% less likely to detect pedestrians of color than white pedestrians. Last month, amid these findings, Axon, the largest producer of police body cameras, announced that it would not produce facial recognition technology in the near future because of concerns about its accuracy in law enforcement.

How can technology be biased? The answer lies in its human creators. Technology must be programmed by humans who are, by nature, biased and fallible. When engineers build artificial intelligence systems without exposing them to enough examples of people of color during training, those systems will fail to perform accurately when they encounter people of color in the real world. Unfortunately, the volunteers whose faces are used for training are too often disproportionately white, in part because white project managers likely have not been socialized to recognize a lack of representation of people of color as a potential problem.

As these recent examples illustrate, such oversights can have extremely dangerous consequences for innocent individuals, even where the technology seems to be a positive development (like autonomous vehicles), let alone where the application is far more personal and ethically questionable.

At a bare minimum, we owe it to everyone to stop using facial recognition technology in all potentially life-changing or life-threatening applications until it is significantly improved and free of racial bias. Even then, questions remain about whether such a flawed technology is appropriate in these instances, and whether it constitutes an unacceptable breach of privacy.