Perception is a powerful thing. It is the foundation of our experience of the world: how we see it, understand it, feel it, recognize it, and process it. Our perception is shaped by our culture, our history, and our circumstances, and it creates the lens through which we see the world and the stories we tell ourselves about it. But can we trust what we see?

It used to be that many people could, with more certainty, distinguish real information from something artificially constructed. I remember grocery shopping as a kid and seeing sensational tabloids in the checkout line with headlines like “Batwoman has 35th child!” or “I was abducted by aliens — again!” The pictures on the tabloids were often laughably crude, and even as a child I was able to look critically at the paper and doubt its authenticity.

It’s harder now. What we see on social media can be altered by camera filters, deepfake videos, and easy-to-access photo editing software. (Not to mention the number of people willfully spreading “fake news” and disinformation.) All of this makes the truth more difficult to discern. Whether it is a personal post or a shared news story, the intent behind it may not matter much: people may be maliciously and intentionally misleading others, simply constructing an aspirational narrative of their lives that falls short of reality, or earnestly sharing their truths. Either way, it can be overwhelming to try to discern what is real and interpret it through our own lens.

Information Overload

Even the most savvy among us may fail to critically examine the messages we receive because we get so many every day. A 2008 study found that the average American mentally processes 34 GB of information from media consumption alone every day (equivalent to 12 straight hours of consumption).[1] This does not count information from actual human conversation. Combine this with the fact that, as humans, we have a limited capacity to focus our attention. (The average human attention span is 8.25 seconds and is shrinking.)

These realities limit the space and capacity we have to practice critical thinking thoughtfully while maintaining the pace of life we have become accustomed to. (Whether we should maintain that pace is another conversation!) This has a profound effect on our reactivity and our judgment. It is reshaping our world.

The upside is that the proliferation of social media and its ease of access have elevated and spread the voices of historically marginalized people and communities. People are able to tell their stories and be heard more easily than ever before. The downside is that stories of hatred, violence, bigotry, racism, sexism, and lies are also elevated and spread. It is easier than ever to spread hate, ignorance, and fear and to have them accelerated by shadowy algorithms and inequitable systems.

Just how far is the reach? The Pew Research Center found that 86% of adult Americans get their news digitally and 42% of Americans aged 18-29 get their news via social media. These social media platforms remain largely unregulated and operate on the whims of their corporate leadership. It’s a scary enough prospect before you even consider who those leaders are.

It’s Coming from Inside the House

Enter Elon Musk. As the new CEO of Twitter, he is the ultimate gatekeeper of what information is shared, who is allowed to share it, who is able to see it, and how much it costs. He is arguably one of the only gatekeepers left at Twitter since he dissolved the board of directors as one of his first official acts as CEO. Why does this matter? What is concerning about one man wielding so much power over the news we consume?

Tesla, the company Musk is best known for, is facing at least 10 lawsuits alleging widespread, systemic racial and sexual harassment. At least seven women alone are suing for sexual harassment. SpaceX, his other company, paid one of Musk’s accusers $250,000 as part of a severance agreement related to his alleged sexual misconduct.

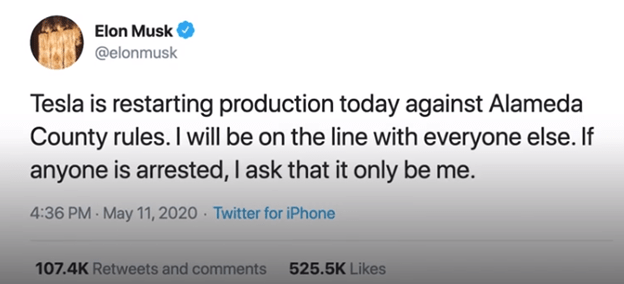

Musk is now nearly single-handedly in charge of what is accepted content on Twitter. We can make inferences about his decision-making abilities, ethics, morals, biases, and prejudices based on this behavior, as well as on his tweets. Here he is referencing the COVID pandemic:

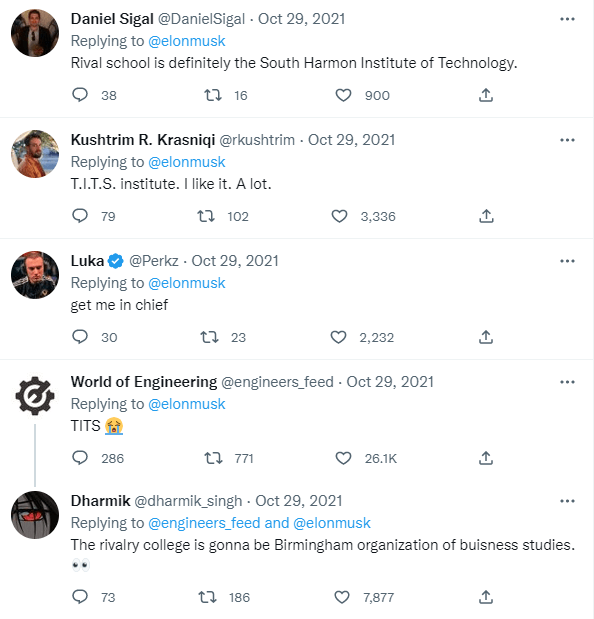

Musk’s take on comedy and women:

The thread continued:

At the time, Musk led two tech companies, yet he still chose to tweet this tasteless, sexist joke, which perpetuated the pervasive sexism and misogyny in tech and set off a firestorm of other men joining in to devalue women.

Musk’s disregard for the impact of his words and behavior on historically marginalized groups, along with his cavalier attitude toward the truth, makes it incredibly concerning that he is now in charge of the fifth most popular social media platform in the U.S., used by 42.4% of adults.

What’s at Stake?

One of Musk’s main complaints about Twitter has been that it “censors” speech and that it has a preponderance of fake bots spreading fake news. In fact, he cited that concern in an unsuccessful attempt to back out of the deal to purchase Twitter. Let’s look at the numbers:

Twenty-five percent of Twitter users produce 97% of tweets posted. Researchers found that during the 2016 election cycle, 1% of Twitter users were exposed to 80% of the fake news on the platform; notably, those users tweeted an average of 71 times per day, which puts them in “power user” territory. (The average Twitter user tweets 2.6 times per day. Elon Musk tweets between 6 and 25 times per day on average.)

Twitter’s SEC filings estimated that around 5% of accounts on the platform were bots. To be fair, bots, in and of themselves, are not bad. Some newspapers have bots that auto-tweet the headlines of new articles. Other bots automatically report sports scores. Bots publish the tweets they are programmed to publish. The real concern is the moderation of the Twitter platform as a whole. Whether content comes from a human or a bot, the only way to ensure its veracity is to monitor and moderate it, a concept completely anathema to a “free-speech absolutist” like Musk.

The Reality of Harm

The reality is that unmonitored speech on Twitter is highly problematic and disproportionately affects women and BIPOC. Amnesty International found that 1 in 10 tweets mentioning Black women or journalists was abusive. The Pew Research Center found that 1 in 5 adult Twitter users experienced harassment or abuse on the platform. In general, unmoderated online spaces (such as 4chan and 8chan) are refuges for extremism, intolerance, hate, and violence, not only because of the lack of moderation but also because there is little fear of repercussions or accountability. In the first 12 hours after Musk’s purchase of Twitter was complete, hate speech increased 5,588% and had the potential to reach over 3 million people. This validated the fears of many Twitter users that the platform was going to rapidly devolve.

In an ironic twist in the pursuit of truth and free speech, one of Musk’s first major initiatives (after mass layoffs and dissolving the board of directors) was to let users with an Apple ID and a phone number purchase verified status on the platform for $8 per month. Previously, users or brands had to go through a free but more thorough verification process. Almost immediately, users created fake accounts impersonating famous individuals and corporations. The paid verification feature was put on hold almost as quickly as it was launched, but not before:

- A fake Eli Lilly account tweeted that the company would begin dispensing insulin for free. After investors saw the tweet, Lilly stock tanked 4.5% practically overnight, and shares of competing drug makers fell as well.

- Lockheed Martin, a weapons manufacturer, lost billions in market value when a fake tweet announced that the company would stop selling weapons to certain countries until their human rights abuses were investigated.

This shows the impact a single tweet can have on the global economy at the macro level. Now imagine the harm created when unmoderated, violent, and hateful tweets flow unchecked at the micro level, where the impact may be even greater: repeated racist, bigoted, and sexist traumas harm everyone, and such bigotry becomes increasingly normalized.

Activists used Twitter’s new verification feature to expose how capitalism prioritizes profits over people and to highlight how volatile, reactive, and easily manipulated the foundation of the American economy is. All of this was done within the rules and boundaries Musk set forth in Twitter’s policies and can be viewed as acts of resistance against someone who epitomizes greed, inequity, and unsustainability.

This form of activism is powerful because, for once, those in positions of power are experiencing what it feels like to be targeted online. However, it does not address or repair the harm marginalized people experience daily or break down the systems that continue to perpetuate the harm.

What Can We Do?

Sometimes it feels hopeless to be a marginalized person navigating online spaces. Laws and regulations have not kept pace with technology. Many of those in positions of political power do not understand the technology or its implications and impact. Many other politicians object to the idea of any type of content moderation. Here are some things you can do:

- Protect yourself and your peace. Curate your online niche to be full of those who support you, educate you, inspire you, and lift you up. Never feel obligated to explain or defend yourself to trolls. Block, ban, and report harm early and often. Don’t let people goad you into thinking this constitutes an “echo chamber.” People who want to spread hate and ignorance fume when they are denied a platform, because they feel entitled to our space and time. They are not. You will never change hearts, minds, and behaviors in the comments.

- Don’t feel obligated to have a presence on every social media platform. Find your people and settle in there. I never felt at home on Twitter because, to me, it felt like thousands of people screaming into the void without any genuine connection or dialogue. (If that’s your thing, or not your Twitter experience, that’s cool!) Give yourself permission to leave spaces that feel unsafe or that you don’t connect with. Spend your time where your people are.

- If your identities fall within dominant groups, use your power and voice to call out harmful posts and behavior. I cannot stress enough how much of an impact this has in rejecting the normalization of bigotry. By declaring hateful, racist, and sexist comments and policies unacceptable, you are using your power to create change. If you are an investor or work in a position of power, speak up. Do not stay silent.

- Remember, the CEOs of nearly all major social media platforms are white men. (Twitter, Facebook, Instagram, Pinterest, LinkedIn, Snapchat, and Reddit are all led by white men. YouTube is led by a white woman. TikTok is led by a Singaporean man.) These leaders are the gatekeepers and are ultimately responsible for how their platforms run: how they handle threats, racism, hate speech, misogyny, and violence; which algorithms they use to curate the news and information you see; how they handle complaints of misconduct; and how they handle your data. We have to remain aware of the lens through which they view their work and the influence it has on our digital experience and the information we consume.

Moving On

Instead of tabloid headlines like “Tourist Knocks Over Tower of Pisa” or “Talking Cat Gets Job,” we are now used to seeing tweets about “stolen elections.” Just as I used to walk past the tabloids at the supermarket, I think it’s a good time to walk away from Twitter. The Winters Group decided to exit. Admittedly, every major social media platform has problematic elements, and we had also found that Twitter was not a platform for meaningful engagement with our followers. Ultimately, though, we could not in good conscience support the direction Elon Musk was taking Twitter or his willful and intentional disregard for marginalized communities.

We will continue to look out for up-and-coming platforms that are led by more inclusive voices and prioritize equity and diversity. It will be a beautiful thing to see connection, conversation, and diversity of thought on a platform that moderates content to create space for psychological safety, harm reduction, and the freedom to exist online in a space where the uniqueness and humanity of every user is recognized and celebrated. Until that becomes reality, I will curate my online spaces as my best approximation of it, in an effort to manifest it into being.

Have you found a new online space that centers DEIJ? Let me know in the comments!